1936

| Alan Turing publishes “On Computable Numbers”, introducing the concept of a “Turing machine”: an abstract model of a computing device, used to explore the scope and limits of what can be computed. |

1943

| Researchers build the first modern computer: an electronic, digital, programmable machine. This gives rise to fundamental questions: Can machines think? Can they ever be intelligent? |

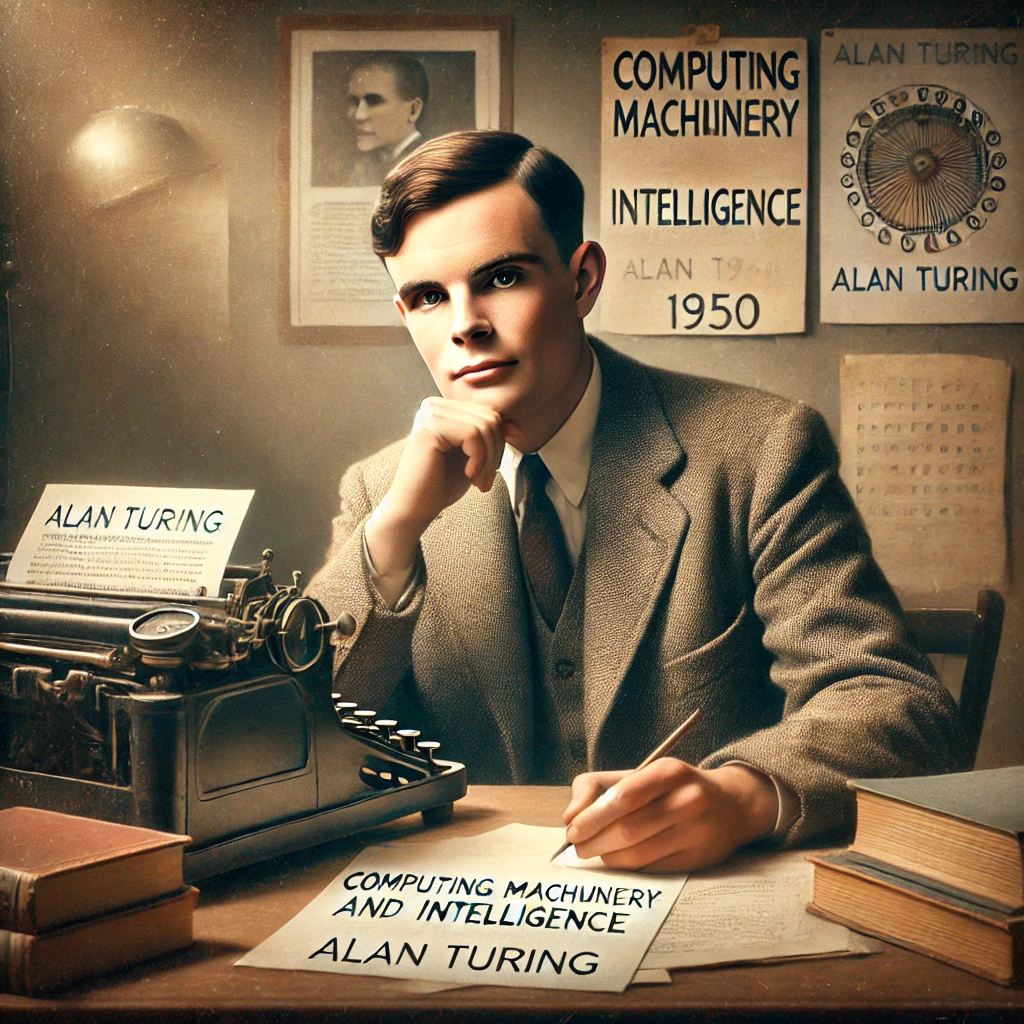

1950

| In the article “Computing Machinery and Intelligence”, Alan Turing proposes to set aside the question of whether machines can think, because what matters is not the mechanism but the manifestation of intelligence. He argues that if a machine behaves in a way that observers cannot distinguish from the behavior of a human being, it should be considered intelligent. This is the basis of the famous “Turing Test”: a computer capable of giving responses indistinguishable from those a human being would give passes as an intelligent machine. |

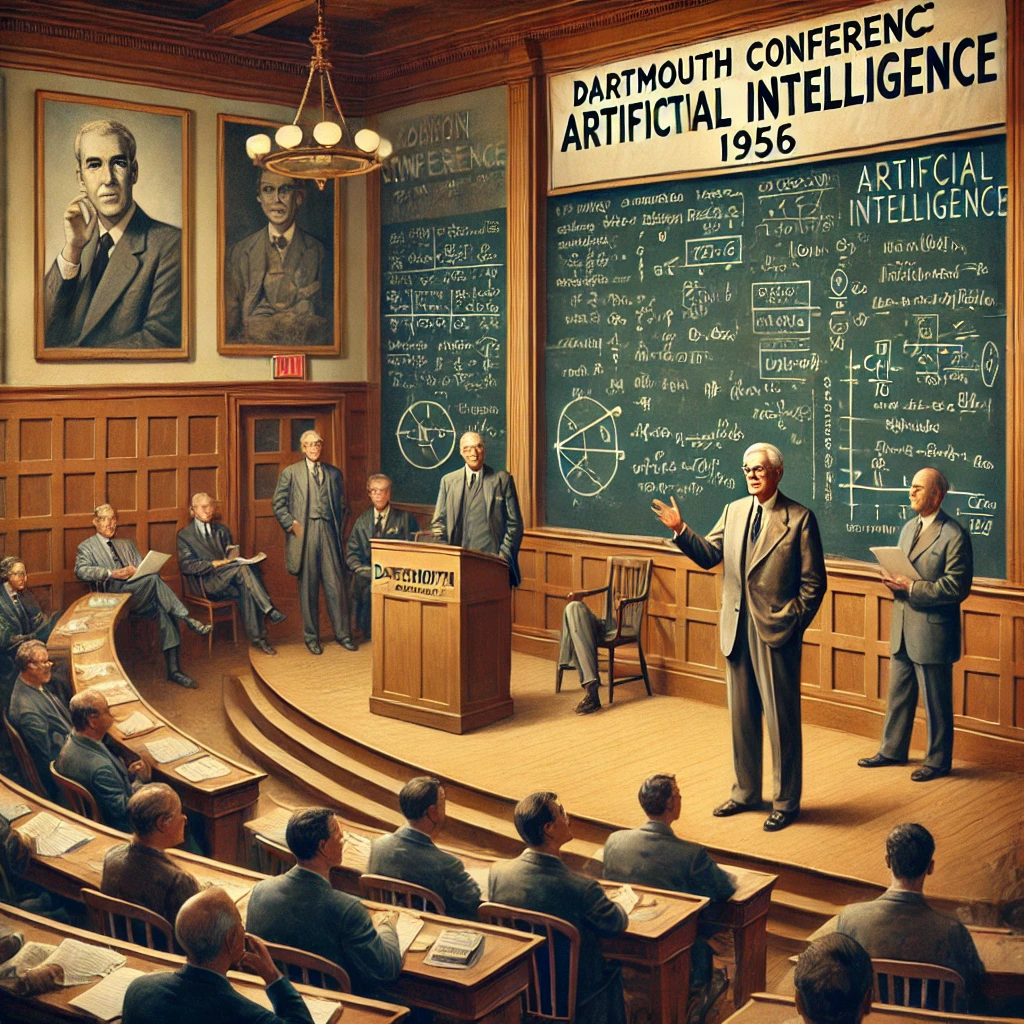

1956

| Computer scientist and mathematician John McCarthy organizes a conference at Dartmouth College to explore the possibility of creating thinking machines. In the proposal for the event, he coins the term “artificial intelligence”, referring to the ability of machines to perform tasks characteristic of human intelligence. |

1966

| Joseph Weizenbaum creates ELIZA, one of the first chatbots (virtual assistants). The program imitates the understanding and attentive attitude of a therapist, and many users find it surprisingly convincing. |

1968

| The HAL 9000 computer, a central character of Stanley Kubrick’s film “2001: A Space Odyssey”, written in collaboration with Arthur C. Clarke, profoundly influences the collective imagination on artificial intelligence. |

1969

| Marvin Minsky and Seymour Papert publish “Perceptrons”, an analysis of the limits and potential of neural networks, models inspired by the human brain that process information through artificial “neurons”. The book highlights important limitations, such as the inability of single-layer perceptrons to solve problems that are not linearly separable (the XOR function is the classic example). It contributes to the first “AI winter”, but also lays the foundation for future research on more advanced neural networks. |

Seventies

| The decade of the so-called “AI winter”. Following the publication of two very pessimistic reports, commissioned by the US and British governments, public funding for research in the field of artificial intelligence was drastically reduced. The two key documents were “Preliminary Discussion of the Artificial Intelligence Problem” (1966), prepared by ARPA (Advanced Research Projects Agency, later known as DARPA), and “The Lighthill Report” (1973), commissioned by the British government. |

1980

| The first national conference of the American Association for Artificial Intelligence (AAAI) is held at Stanford University in the United States, an event that marks an important step in the diffusion and recognition of AI as a scientific discipline. |

1997

| IBM’s Deep Blue supercomputer defeats world chess champion Garry Kasparov, marking a major milestone in artificial intelligence. In one of the games, Deep Blue makes an unexpected move that leaves Kasparov deeply disoriented. The move, initially interpreted as an advanced strategy, may in fact have been the result of a software error. Even so, it showed how a logic devoid of emotion and preconceptions can surprise even the most expert players, opening the debate on a possible “superior logic” that surpasses human logic. |

2011

| IBM Watson wins the general knowledge quiz show Jeopardy! against two of the show’s greatest champions. The game involves giving the correct question for a given answer. Watson’s victory demonstrates that a machine can compete in a game based on general knowledge and language comprehension, a task that combines several complex skills. |

2014

| The Eugene Goostman chatbot, developed by Russian Vladimir Veselov (based in the United States) and Ukrainian Eugene Demchenko (based in Russia), passes a version of the Turing Test. Eugene Goostman is designed to simulate a thirteen-year-old Ukrainian boy. Although greeted with skepticism by the scientific community, the result brings media attention to the potential of chatbots and conversational artificial intelligence. |

2016

| DeepMind’s AlphaGo defeats Go world champion Lee Sedol in a series of historic games. Go is an ancient strategy game originating in the Far East, known for a complexity and unpredictability considered far greater than those of chess. AlphaGo’s victory is an extraordinary achievement for artificial intelligence, made possible by deep neural networks and reinforcement learning, which allow AI to tackle complex and unpredictable problems. An iconic moment of the challenge is “Move 37” in the second game: a move that seemed illogical to human players but proved extraordinarily effective, leaving Lee Sedol and the Go experts deeply surprised. It demonstrated how AI can find innovative solutions that elude even the best human players. Lee Sedol has his own moment of glory in the fourth game, when he surprises AlphaGo with “Move 78”, forcing the system into an error and winning the game. That win showed that, despite the advances in AI, human creativity and intuition still hold a unique value. The defeat was an emotionally charged experience for Lee Sedol, who reflected on the nature of the game and the future of competition between humans and machines. The match became symbolic, highlighting the strengths and limitations of both human and artificial intelligence. |

2018

| Google demonstrates Duplex, an AI that can book a hair salon appointment or call a restaurant about a reservation by speaking on the phone like a normal customer. Duplex uses advanced natural language understanding and generation technology, including elements such as pauses and vocal inflections, to make the conversation sound remarkably lifelike. The demonstration shows that AI can interact in everyday contexts, while also raising ethical questions about transparency when machines take part in human interactions. |

2020

| OpenAI releases GPT-3, a large language model (LLM) with 175 billion parameters, which at the time of its launch is among the largest and most capable language models ever created. GPT-3 shows remarkable capabilities in generating text, answering questions, and producing creative content, opening up new vistas for AI. |

2022

| OpenAI launches DALL-E 2, an AI model that can generate images from text descriptions, showing a new frontier of AI-assisted creativity. That same year, OpenAI releases ChatGPT, built on a model from the GPT-3.5 series and optimized for conversational interactions, capable of natural dialogue and of providing detailed answers on a wide range of topics. |

2023

| OpenAI releases GPT-4, a more advanced version of its language model, capable of understanding and generating text with greater precision, coherence, and awareness of context. GPT-4 stands out for its ability to handle more complex conversations, answer technical questions with greater accuracy, and interpret linguistic nuances that improve interactions with users. |